- October 3, 2023

- By Rosan International

The Challenges of Inference on a Video Stream

Real-time video inference on edge devices is plagued by challenges including limited computational resources, power consumption, heat generation, latency, model size, data transfer constraints, environmental variability, update and maintenance difficulties, and scalability issues. So we need to be super-judicious with computational resources and focus compute on the most important parts of the stream. Currently, our algorithm takes a continuous video stream and performs inference on every frame that comes in, even if nothing is happening at all. This is extremely inefficient, leading to unnecessary battery consumption and device overheating.

We must surely be able to do better. We want to warm the planet with our smile, not our carbon footprint! So let’s see if we can find a motion detection algorithm that can be executed in real-time with minimal resources while still filtering out static video segments.

Motion Detection Algorithms

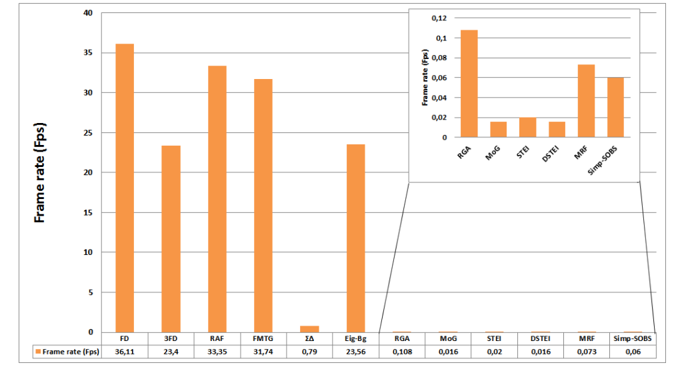

The field of motion detection has a long history and a crowded space of algorithms to choose from. Sehairi, Chouireb, & Meunier (2017) conducted one of the best comparisons we have found. Their review evaluated the following algorithms.

- Frame Differencing (FD): This technique involves calculating pixel-wise differences between consecutive frames.

- Three-Frame Difference (3FD): Extends frame differencing by comparing the current frame with the previous two frames, which helps reduce false positives.

- Running Average Filter (RAF): An adaptive background subtraction method that maintains a dynamic background model and updates it over time.

- Forgetting Morphological Temporal Gradient (FMTG): Morphological operation-based method that calculates temporal gradients while incorporating a forgetting mechanism to handle background changes.

- Sigma-Delta Background Estimation (ΣΔ): Employs a method inspired by sigma-delta modulation to differentiate between moving objects and the background.

- Spatio-Temporal Markov Random Field (MRF): Considers the temporal and spatial relationships between pixels to identify moving objects.

- Running Gaussian Average (RGA): Maintains a running average of pixel values over time and detects motion by comparing current pixel values with the running average.

- Mixture of Gaussians (MoG): Probabilistic approach that models pixel values using a mixture of Gaussian distributions.

- Spatio-Temporal Entropy Image (STEI): Represents motion by calculating the entropy of pixel values over time and space, helping identify regions with changing content.

- Difference-Based Spatio-Temporal Entropy Image (DSTEI): Enhances STEI by considering differences in pixel values between consecutive frames.

- Eigen-Background (Eig-Bg): Employs eigenvalues and eigenvectors to represent background information and separate it from moving objects in the scene.

- Simplified Self-Organized Map (Simp-SOBS): Simplified version of the self-organized map to cluster pixel values and distinguish moving objects from the background in a self-adaptive manner.
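To make the first three entries in this list more concrete, here is a minimal sketch in plain Kotlin. It assumes each frame is a flat `IntArray` of grayscale intensities (0 to 255). The function names, the thresholds, and the learning rate `alpha` are illustrative assumptions of ours, not taken from Sehairi et al. The three-frame variant follows one common formulation, in which a pixel counts as moving only if it differs from both of the two previous frames.

```kotlin
import kotlin.math.abs

/** Frame differencing: a pixel is "moving" if it changed by more than [threshold] since the previous frame. */
fun frameDifferenceMask(current: IntArray, previous: IntArray, threshold: Int): BooleanArray {
    require(current.size == previous.size) { "Frames must have the same size" }
    return BooleanArray(current.size) { i -> abs(current[i] - previous[i]) > threshold }
}

/** Three-frame differencing: a pixel is "moving" only if it differs from BOTH previous frames. */
fun threeFrameDifferenceMask(
    current: IntArray,
    previous: IntArray,
    beforePrevious: IntArray,
    threshold: Int,
): BooleanArray {
    val vsPrevious = frameDifferenceMask(current, previous, threshold)
    val vsBeforePrevious = frameDifferenceMask(current, beforePrevious, threshold)
    return BooleanArray(current.size) { i -> vsPrevious[i] && vsBeforePrevious[i] }
}

/** Running average filter: compares each frame against a slowly adapting background model. */
class RunningAverageBackground(firstFrame: IntArray, private val alpha: Float = 0.05f) {
    private val background = FloatArray(firstFrame.size) { i -> firstFrame[i].toFloat() }

    fun apply(frame: IntArray, threshold: Int): BooleanArray {
        require(frame.size == background.size) { "Frames must have the same size" }
        // Foreground mask: pixels that deviate from the current background estimate.
        val mask = BooleanArray(frame.size) { i -> abs(frame[i] - background[i]) > threshold }
        // Blend the new frame into the background so gradual changes are absorbed.
        for (i in frame.indices) {
            background[i] = alpha * frame[i] + (1 - alpha) * background[i]
        }
        return mask
    }
}
```

Plain frame differencing needs only one stored frame and one pass over the pixels, which is consistent with it being the cheapest option in the list.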

Choosing an Algorithm

Sehairi, Chouireb, & Meunier (2017) evaluated the performance of these algorithms using the CDnet video dataset, a benchmark that ranges from simple scenes to complex scenes affected by bad weather and dynamic backgrounds. Their results showed that there is no perfect method for all challenging cases; each method performs well in certain cases and fails in others. For our use case, though, the key finding is that Frame Differencing, with its simple computational approach, was the fastest algorithm and performed particularly well on night video.

Understanding the Code

The snippet below contains the logic for motion detection:

```kotlin
import android.graphics.Bitmap
import android.graphics.Canvas
import android.graphics.Color
import android.graphics.ColorMatrix
import android.graphics.ColorMatrixColorFilter
import android.graphics.Paint
import kotlin.math.abs

// inputSource, SOURCE_DEVICE_CAMERA and SOURCE_EXTERNAL_CAMERA are defined elsewhere in the app.

private var prevFrame: Bitmap? = null
private var motionThresholdDevice = 10000000 // Calibrate threshold to device.
private var motionThresholdExternal = 500000 // Calibrate threshold to external camera.

private fun detectMotion(frame: Bitmap): Boolean {
    // Bitmap.getConfig() may return null for non-public formats, so fall back to ARGB_8888.
    val config = frame.config ?: Bitmap.Config.ARGB_8888
    val previous = prevFrame
    if (previous == null) {
        // Initialize the previous frame on the first call.
        prevFrame = frame.copy(config, true)
        return false
    }

    // Convert both frames to grayscale for simplicity (you can use more advanced methods)
    val currentFrameGray = convertToGrayscale(frame)
    val prevFrameGray = convertToGrayscale(previous)

    // Compute the absolute difference between the current and previous frames
    val frameDiff = calculateFrameDifference(currentFrameGray, prevFrameGray)

    // Update the previous frame with the current frame
    prevFrame = frame.copy(config, true)

    // Check if motion is detected based on device and threshold
    return when (inputSource) {
        SOURCE_DEVICE_CAMERA -> frameDiff > motionThresholdDevice
        SOURCE_EXTERNAL_CAMERA -> frameDiff > motionThresholdExternal
        else -> throw Exception("Please set proper input source")
    }
}

private fun convertToGrayscale(frame: Bitmap): Bitmap {
    val grayFrame = Bitmap.createBitmap(frame.width, frame.height, Bitmap.Config.RGB_565)
    val canvas = Canvas(grayFrame)
    val paint = Paint()
    val colorMatrix = ColorMatrix()
    colorMatrix.setSaturation(0f) // Convert to grayscale
    paint.colorFilter = ColorMatrixColorFilter(colorMatrix)
    // Draw the source frame through the desaturating filter onto the new bitmap.
    canvas.drawBitmap(frame, 0f, 0f, paint)
    return grayFrame
}

private fun calculateFrameDifference(frame1: Bitmap, frame2: Bitmap): Int {
    val width = frame1.width
    val height = frame1.height
    val pixels1 = IntArray(width * height)
    val pixels2 = IntArray(width * height)
    frame1.getPixels(pixels1, 0, width, 0, 0, width, height)
    frame2.getPixels(pixels2, 0, width, 0, 0, width, height)

    var diff = 0
    for (i in pixels1.indices) {
        val pixel1 = pixels1[i]
        val pixel2 = pixels2[i]

        // Calculate the absolute difference in RGB values
        val deltaRed = abs(Color.red(pixel1) - Color.red(pixel2))
        val deltaGreen = abs(Color.green(pixel1) - Color.green(pixel2))
        val deltaBlue = abs(Color.blue(pixel1) - Color.blue(pixel2))

        // Add the overall difference for the pixel to the running total
        diff += deltaRed + deltaGreen + deltaBlue
    }
    return diff
}
```

Let’s break down the key components of this code:

- `detectMotion(frame: Bitmap)`: This function takes a frame (as a `Bitmap`) as input and determines whether motion is detected. It does so by comparing the current frame with the previous frame using the `calculateFrameDifference` function. If the frame difference exceeds a predefined threshold (`motionThresholdDevice` or `motionThresholdExternal`, depending on the input source), it returns `true`, indicating that motion is detected. On the very first call there is no previous frame yet, so it stores the frame and returns `false`.

- `convertToGrayscale(frame: Bitmap)`: To simplify the motion detection process, both the current and the previous frame are converted to grayscale. Grayscale images contain only shades of gray (from black to white), which simplifies the comparison of pixel values.

- `calculateFrameDifference(frame1: Bitmap, frame2: Bitmap)`: This function calculates the absolute difference in RGB values between two frames. It calculates the difference for each pixel in the frames and sums up these differences to get an overall difference score.
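Because `Bitmap` and `Color` are only available on Android, the scoring logic is awkward to unit test off-device. The sketch below is a hypothetical plain-Kotlin equivalent of `calculateFrameDifference` that works directly on the packed ARGB `IntArray`s filled by `getPixels`. The name `frameDifferenceScore` and the `Long` accumulator are our assumptions, not part of the original code. The `Long` matters for large frames: each pixel contributes up to 765 (3 × 255), so in the worst case an `Int` sum overflows once a frame has more than roughly 2.8 million pixels.

```kotlin
import kotlin.math.abs

/**
 * Sums the absolute per-channel differences between two frames given as
 * packed ARGB pixels (the layout produced by Bitmap.getPixels).
 */
fun frameDifferenceScore(pixels1: IntArray, pixels2: IntArray): Long {
    require(pixels1.size == pixels2.size) { "Frames must have the same number of pixels" }
    var diff = 0L
    for (i in pixels1.indices) {
        val p1 = pixels1[i]
        val p2 = pixels2[i]
        // Same channel extraction as Color.red/green/blue on a packed ARGB int.
        val deltaRed = abs(((p1 shr 16) and 0xFF) - ((p2 shr 16) and 0xFF))
        val deltaGreen = abs(((p1 shr 8) and 0xFF) - ((p2 shr 8) and 0xFF))
        val deltaBlue = abs((p1 and 0xFF) - (p2 and 0xFF))
        diff += deltaRed + deltaGreen + deltaBlue
    }
    return diff
}
```

For example, two frames that differ only in a single pixel going from opaque black (`0xFF000000`) to opaque white (`0xFFFFFFFF`) produce a score of 765.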

Frame Differencing in Action

Next we apply the motion detection filter to our video classification model. As you can see in the gif below, the model receives no input while the image is still, so its predictions do not change. As soon as the algorithm detects a significant amount of movement, frames are fed to the model again to update the predictions for each of the three states.
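The gating logic can be sketched as a small wrapper that calls the model only when motion is detected and otherwise returns the last prediction. This is a hypothetical illustration in plain Kotlin: `MotionGate`, its type parameters, and the two function parameters are our own names, standing in for `detectMotion` and whatever inference call the app actually uses.

```kotlin
/**
 * Forwards a frame to the model only when the motion detector fires.
 * F is the frame type, P is the prediction type.
 */
class MotionGate<F, P>(
    private val detectMotion: (F) -> Boolean,
    private val runInference: (F) -> P,
) {
    /** Most recent model output, or null if the model has not run yet. */
    var lastPrediction: P? = null
        private set

    /** Counters that make it easy to see how many frames were skipped. */
    var framesSeen = 0
        private set
    var framesInferred = 0
        private set

    fun onFrame(frame: F): P? {
        framesSeen++
        if (detectMotion(frame)) {
            // Motion detected: spend compute on this frame and cache the result.
            lastPrediction = runInference(frame)
            framesInferred++
        }
        // Static frame: reuse the cached prediction without running the model.
        return lastPrediction
    }
}
```

Note that with a detector like `detectMotion`, which returns `false` on its first call, the gate yields `null` until the first motion event. Comparing `framesInferred` with `framesSeen` gives a quick estimate of how much inference work the filter saves.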

One aspect that requires careful fine-tuning is the motion detection threshold. As you can see in the code above, the threshold is quite different for an external camera and for the device camera. We even saw major differences between the device camera on the Android Studio emulator and on an actual device. Calibration cuts both ways: if the threshold is too low, we lose all our efficiency gains, but if it is too high, the motion detection algorithm starts skipping too many frames and inference becomes useless. The optimally efficient boundary is usually razor thin, so calibrate carefully!
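One way to make calibration less of a guessing game is to record difference scores while the camera points at a scene you know is static, then place the threshold a margin above that noise floor. The sketch below is an assumption on our part, not the procedure used to obtain the values in the code: `calibrateThreshold` and its `margin` parameter are hypothetical, and the mean-plus-k-standard-deviations rule is just one reasonable heuristic.

```kotlin
import kotlin.math.sqrt

/**
 * Suggests a motion threshold from frame-difference scores recorded on a static scene.
 * The threshold is the mean score plus [margin] population standard deviations.
 */
fun calibrateThreshold(staticScores: List<Long>, margin: Double = 3.0): Long {
    require(staticScores.isNotEmpty()) { "Need at least one score from a static scene" }
    val mean = staticScores.average()
    val variance = staticScores.map { (it - mean) * (it - mean) }.average()
    return (mean + margin * sqrt(variance)).toLong()
}
```

Because the difference score is a sum over all pixels, it grows with frame resolution, which may be one reason the thresholds differ so much between cameras. Recalibrating per input source, as the code does, keeps that effect in check.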

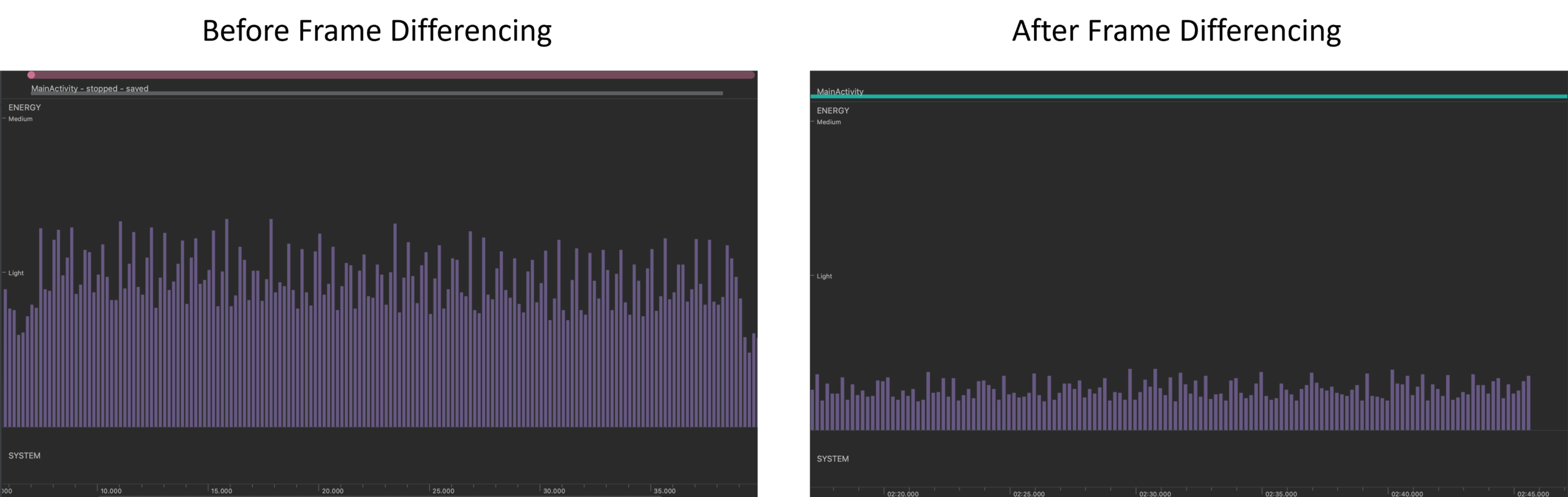

Evaluating Energy Consumption

To check the impact of the motion detection filter on energy usage, we turn to the profiling tool provided by Android Studio, which you can access as follows:

- Open your project in Android Studio.

- Connect your Android device to your computer.

- Run your app on the device in debug mode.

- In Android Studio, go to “View” -> “Tool Windows” -> “Profiler” to open the Profiler tab.

We then run the app with and without the motion detection algorithm to evaluate energy consumption on the same static video stream. As you can see below, frame differencing reduces energy consumption on the static video stream to about 25% of the baseline energy consumption.

In conclusion, implementing an efficient motion detection algorithm like Frame Differencing is a significant step toward reducing energy consumption and optimizing real-time video inference on edge devices. Beyond our light-hearted claims to be saving the planet, the main benefit of this algorithm is that it makes the app lighter on the edge device, which in turn reduces battery depletion and overheating.
